What Are AI Evals and Why Are They a Technical Moat?

AI evals are the enterprise moat: systematic evaluation systems that transform probabilistic AI into reliable assets. Learn more about what they are and how to work with them.

Key Takeaways

Evals surpass models as moats by converting probabilistic outputs into auditable, reliable assets for enterprise deployment.

Tiered evaluation rigor (L1-L4) enables progressive validation from syntax checks to business impact simulation.

Hybrid scoring systems combine automated metrics, human review, and LLM judges for compliance-critical use cases.

Probabilistic methods (Monte Carlo, stability scoring) address non-determinism in high-stakes environments.

Evaluation-aware MLOps integrates evals into CI/CD pipelines to enforce governance and enable continuous improvement.

In 2023, I started Multimodal, a Generative AI company that helps organizations automate complex, knowledge-based workflows using AI Agents.

The non-deterministic nature of generative AI fundamentally disrupts traditional software paradigms. In deterministic systems, unit tests verify fixed outputs; generative AI produces probabilistic results instead. The same prompt can yield different outputs from one run to the next and across model versions, which leaves conventional QA inadequate. Binary pass/fail checks cannot capture the nuance of natural-language outputs or the behavior of complex completion functions. Industry leaders emphasize this shift:

Garry Tan (YC CEO): "AI evals are emerging as the real moat for AI startups."
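To make the testing problem concrete, here is a minimal sketch that samples a stand-in "model" 20 times for one prompt. The model, its phrasings, and both checks are hypothetical illustrations, not taken from any real system. It contrasts an exact-match unit test with a looser property check and computes a simple stability score (the share of samples matching the most common output), one basic form of the Monte Carlo stability scoring mentioned above.

```python
import random
from collections import Counter


# Hypothetical stand-in for a generative model: it picks one of several
# valid phrasings at random to mimic sampling non-determinism.
def fake_model(prompt: str, rng: random.Random) -> str:
    phrasings = [
        "Refunds are processed within 5 business days.",
        "We process refunds in five business days.",
        "Your refund will arrive within 5 business days.",
    ]
    return rng.choice(phrasings)


def exact_match_test(output: str) -> bool:
    # Traditional unit test: pins one exact string.
    return output == "Refunds are processed within 5 business days."


def property_test(output: str) -> bool:
    # Looser check: does the answer state the 5-business-day policy?
    text = output.lower()
    return ("5" in text or "five" in text) and "business day" in text


def stability_score(outputs: list[str]) -> float:
    # Fraction of samples that agree with the most common output.
    top_count = Counter(outputs).most_common(1)[0][1]
    return top_count / len(outputs)


rng = random.Random(42)
outputs = [fake_model("How long do refunds take?", rng) for _ in range(20)]

exact_rate = sum(exact_match_test(o) for o in outputs) / len(outputs)
property_rate = sum(property_test(o) for o in outputs) / len(outputs)
print(f"exact-match pass rate: {exact_rate:.2f}")
print(f"property pass rate:    {property_rate:.2f}")
print(f"stability score:       {stability_score(outputs):.2f}")
```

Every sampled answer is correct, so the property check passes all of them (1.00), while the exact-match rate only reflects how often the one pinned phrasing happened to be sampled.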

Evals make reliability measurable. They turn subjective assessments into quantifiable metrics and track performance across failure modes, model versions, and production data. For enterprise AI applications, this isn’t just testing: it’s the core process that gates deployment, informs fine-tuning, and turns probabilistic systems into trusted assets.
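As a small illustration of tracking performance across failure modes and model versions, the sketch below groups raw eval records by (model version, failure mode) and reports a pass rate for each group. The record fields, version names, and results are hypothetical.

```python
from collections import defaultdict

# Hypothetical eval records: one row per test case run against one model
# version, tagged with the failure mode the case probes.
results = [
    {"model": "v1", "failure_mode": "hallucination", "passed": True},
    {"model": "v1", "failure_mode": "hallucination", "passed": False},
    {"model": "v1", "failure_mode": "policy_violation", "passed": True},
    {"model": "v2", "failure_mode": "hallucination", "passed": True},
    {"model": "v2", "failure_mode": "hallucination", "passed": True},
    {"model": "v2", "failure_mode": "policy_violation", "passed": False},
]


def pass_rates(rows: list[dict]) -> dict[tuple[str, str], float]:
    """Return {(model, failure_mode): pass_rate} from raw eval rows."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    passes: dict[tuple[str, str], int] = defaultdict(int)
    for row in rows:
        key = (row["model"], row["failure_mode"])
        totals[key] += 1
        passes[key] += int(row["passed"])
    return {key: passes[key] / totals[key] for key in totals}


for (model, mode), rate in sorted(pass_rates(results).items()):
    print(f"{model:<4} {mode:<18} {rate:.0%}")
```

Both versions pass two of their three cases overall, yet the breakdown shows v2 fixing hallucinations while regressing on policy compliance, which a single aggregate score would hide.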

Let’s learn more about evals and how they can help build robust enterprise AI systems.

Deconstructing Evals: Beyond Basic Testing

What Evals Actually Measure

Enterprise AI evals transcend traditional unit tests by quantifying four critical dimensions:

Accuracy vs. precision tradeoffs: Measuring factual correctness while allowing for contextual nuance in natural language outputs.

Contextual alignment: Verifying outputs comply with business rules, regulatory constraints, and domain-specific logic.

Safety & bias quantification: Detecting toxicity, discrimination, and security vulnerabilities in model behavior.

Cost-performance optimization: Balancing inference cost against quality thresholds for sustainable scaling.
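One way to picture these four dimensions working together is a per-sample score record plus a gate that checks each dimension against its own threshold. This is a minimal sketch: the `EvalResult` fields, the threshold values, and the choice to gate safety on the worst sample rather than the average are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Hypothetical per-sample scores, one field per dimension."""
    factually_correct: bool       # accuracy
    follows_business_rules: bool  # contextual alignment
    toxicity: float               # safety: 0.0 (clean) to 1.0 (toxic)
    cost_usd: float               # inference cost for this sample


# Illustrative thresholds; real values depend on the use case's risk tolerance.
MIN_ACCURACY = 0.95
MIN_RULE_COMPLIANCE = 0.99
MAX_TOXICITY = 0.10
MAX_COST_PER_1K_USD = 5.00


def gate(results: list[EvalResult]) -> dict[str, bool]:
    """Check each dimension against its threshold over a batch of results."""
    n = len(results)
    accuracy = sum(r.factually_correct for r in results) / n
    compliance = sum(r.follows_business_rules for r in results) / n
    worst_toxicity = max(r.toxicity for r in results)  # worst case, not average
    cost_per_1k = 1000 * sum(r.cost_usd for r in results) / n
    return {
        "accuracy": accuracy >= MIN_ACCURACY,
        "alignment": compliance >= MIN_RULE_COMPLIANCE,
        "safety": worst_toxicity <= MAX_TOXICITY,
        "cost": cost_per_1k <= MAX_COST_PER_1K_USD,
    }


batch = [EvalResult(True, True, 0.02, 0.003) for _ in range(99)]
batch.append(EvalResult(False, True, 0.40, 0.004))  # one wrong, toxic answer
checks = gate(batch)
print(checks, "-> deploy" if all(checks.values()) else "-> block")
```

Here accuracy (99%), alignment, and cost all pass, but the single toxic output fails the safety check and blocks the release. Gating safety on the worst case reflects that one harmful response can matter more than a good average.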

Unlike binary unit tests, systematic evaluation analyzes how different model versions handle edge cases in production data. This requires high-quality evals that simulate real user inputs and known failure modes.
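A simple way to derive edge cases from real user input is to perturb logged queries. The sketch below uses a hypothetical `perturb` helper with a hand-picked, illustrative set of variants (casing, whitespace, duplication, truncation, empty input, and an adjacent-character typo); production suites would typically add domain-specific variants.

```python
import random


def perturb(query: str, rng: random.Random) -> list[str]:
    """Return edge-case variants of a real user query (illustrative set)."""
    variants = [
        query.upper(),               # all caps
        "   " + query + "   ",       # stray surrounding whitespace
        query + " " + query,         # accidental duplication
        query[: len(query) // 2],    # truncated mid-sentence
        "",                          # empty input
    ]
    # Simulated typo: swap two adjacent characters at a random position.
    if len(query) >= 2:
        i = rng.randrange(len(query) - 1)
        chars = list(query)
        chars[i], chars[i + 1] = chars[i + 1], chars[i]
        variants.append("".join(chars))
    return variants


rng = random.Random(0)
for case in perturb("Cancel my order #1234", rng):
    print(repr(case))
```

Seeding the random generator keeps the generated suite reproducible, so the same edge cases can be rerun against every model version.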

The Evaluation Taxonomy

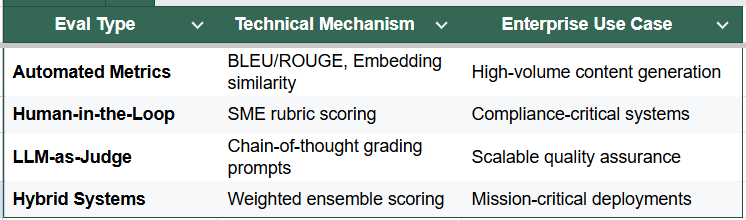

Effective evaluation systems combine techniques based on risk tolerance and use case. When running evals, AI PMs should:

Define test cases covering critical failure modes

Generate synthetic data for edge scenarios

Write prompts using eval templates (e.g., OpenAI Evals format)

Analyze metrics like hallucination rates and compliance scores
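The steps above can be sketched end to end. OpenAI Evals' basic templates read samples from a JSONL file in which each line holds an `input` list of chat messages and an `ideal` answer. Assuming that format, the sketch writes a few hypothetical test cases to `samples.jsonl` and scores a set of invented model outputs with a simple prefix match. It does not call the Evals library, and the scoring rule is an illustrative stand-in rather than the library's own scorer.

```python
import json
from pathlib import Path

SYSTEM = "Answer using only the company policy. Reply with the answer only."

# Hypothetical test cases: (user question, ideal answer).
cases = [
    ("How many days do customers have to request a refund?", "30"),
    ("Which plan includes SSO?", "Enterprise"),
    ("Do we ship to Antarctica?", "No"),
]


def to_sample(question: str, ideal: str) -> dict:
    """Build one line of an OpenAI Evals-style basic dataset."""
    return {
        "input": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": question},
        ],
        "ideal": ideal,
    }


path = Path("samples.jsonl")
with path.open("w", encoding="utf-8") as f:
    for question, ideal in cases:
        f.write(json.dumps(to_sample(question, ideal)) + "\n")

# Hypothetical model outputs, scored with a simple prefix match on "ideal".
model_outputs = ["30 days", "Enterprise plan", "Yes, via partner carriers"]
samples = [json.loads(line) for line in path.read_text(encoding="utf-8").splitlines()]
matches = [out.strip().startswith(s["ideal"]) for out, s in zip(model_outputs, samples)]
print(f"match rate: {sum(matches) / len(matches):.2f}")
```

This prints `match rate: 0.67`. The third output is the kind of confident, unsupported answer that a hallucination metric is meant to catch.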

For example, installing the OpenAI Evals framework (`pip install evals`) and running it against the current model establishes a baseline, while evaluating fine-tuned completion functions against domain-specific JSON datasets shows how much precision improves. The evaluation process culminates in a final report that compares model versions against business KPIs.
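A final report can be as simple as a table of each metric for the baseline and the candidate, the change between them, and whether the candidate meets a business KPI. In the sketch below, the metric names, values, and KPI thresholds are all hypothetical.

```python
# Hypothetical metrics for two model versions on the same eval suite.
baseline = {"accuracy": 0.86, "compliance": 0.97, "hallucination_rate": 0.08}
candidate = {"accuracy": 0.91, "compliance": 0.96, "hallucination_rate": 0.05}

# Illustrative business KPIs: metric -> (direction, threshold).
KPIS = {
    "accuracy": ("min", 0.90),
    "compliance": ("min", 0.98),
    "hallucination_rate": ("max", 0.06),
}


def report(base: dict, cand: dict) -> list[tuple[str, float, float, float, bool]]:
    """Compare candidate to baseline and check each KPI threshold."""
    rows = []
    for metric, (direction, threshold) in KPIS.items():
        value = cand[metric]
        meets = value >= threshold if direction == "min" else value <= threshold
        rows.append((metric, base[metric], value, value - base[metric], meets))
    return rows


for metric, base_v, cand_v, delta, meets in report(baseline, candidate):
    status = "PASS" if meets else "FAIL"
    print(f"{metric:<20} {base_v:.2f} -> {cand_v:.2f} ({delta:+.2f}) {status}")
```

In this example the candidate improves accuracy and cuts hallucinations, yet it still misses the compliance KPI, so a report like this would flag it before deployment rather than after.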