Roblox CEO Talks AI Game Generation, Child Safety, And Virtual Cash Allowances

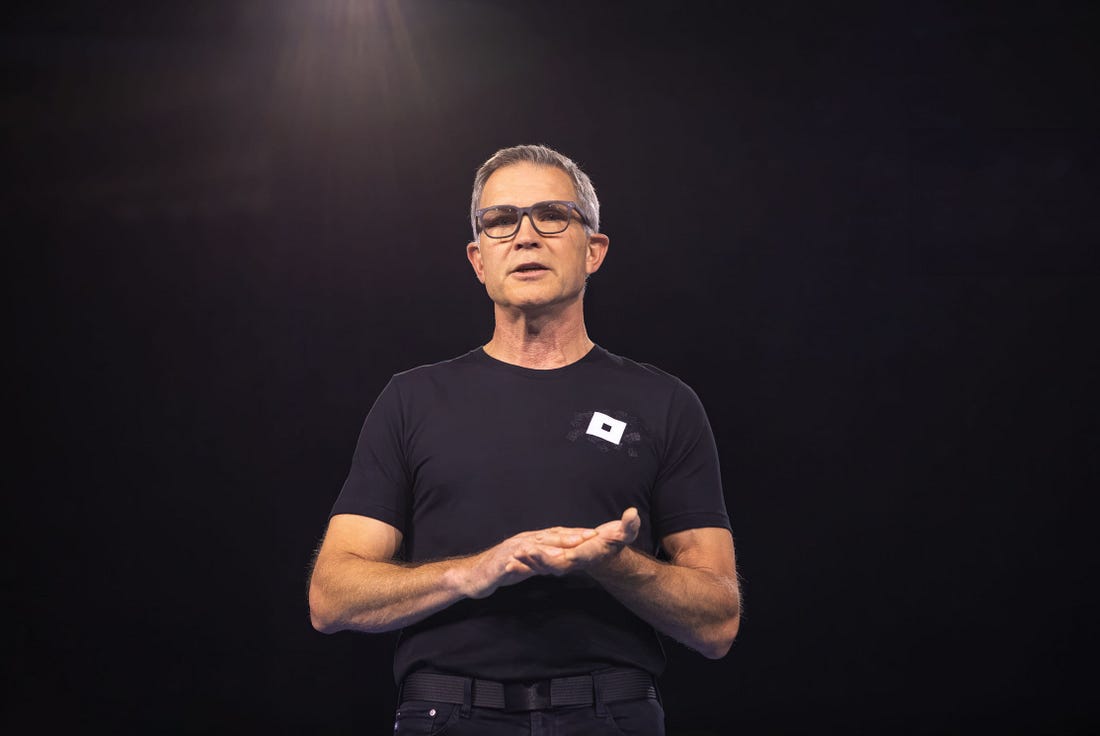

Roblox CEO David Baszucki says he wants both creators and players to be able to prompt experiences out of thin air.

As generative AI moves from text and image generation to video and 3D rendering, the technology may soon be able to create video games with a few prompts.

That’s what Roblox CEO David Baszucki told me in our Big Technology Podcast conversation this week. Baszucki wants to leverage the “enormous amount of data” the platform has collected to help both creators and players imagine and build new experiences.

“The North Star is not just object creation,” Baszucki said. “[But], full on experience creation, where one could imagine someone drawing a few sketches of characters, describing a few fun types of gameplay and generat[ing] their own Roblox experience from that.”

In a wide-ranging conversation, we cover generative AI’s possibilities for game creation and gameplay, how Roblox responds to a searing research report alleging serious child safety issues on the platform, and whether parents should give kids virtual cash.

You can read the Q&A below, edited for length and clarity, and listen to the full episode on Apple Podcasts, Spotify, or your podcast app of choice.

Alex Kantrowitz: How far is Roblox from a place where you could say: Imagine a world where the characters are dragons, and these are the objectives, and it should be easy for users to play, and then it just creates the game?

David Baszucki: We’re getting closer at remarkably high speed. One of the accelerants of this, and this is true for any AI system, is the more data that's available for training (in a legal, copyright, and TOS compliant way), the more quickly we can build up AI power.

Roblox is unique, in that we have an enormous amount of data: 3D objects, 3D scenes. There's hundreds and hundreds of medieval castles, there's thousands of cars, there's thousands of dragons and we're using that data to create, not an LLM, but a 3D foundational model within our AI team.

We're going to release the first version of it this quarter in several forms. We're going to release it as an open source 3D foundational model for some people to use. We're going to release it in Roblox Studio, so you will be able to say, “make me a good dragon.”

But we're also going to release it for creators to use within their games. We will be testing the very first version of you and I in a Roblox experience, saying, you make your dragon and I make my lion, and we start to see them emerge. Obviously, it's version one, but this is going to happen this quarter.

These are elements of a game. Do you envision actually being able to prompt and play a game?

This is really insightful because elements ultimately add up to the full experience. The full experience of a game, especially on Roblox, is quite rich. We have 3D objects. Unbeknownst to a lot of people, the 3D objects on Roblox actually tend to be physically pretty realistic. We run physics simulations. Cars have wheels. If wheels fall off cars, the cars skid out. We have 3D terrain. We have a lot of code embedded in all of those objects. Roblox game creators embed code at the world level and the object level, and you're exactly right: The North Star is not just object creation, but full on experience creation, where one could imagine someone drawing a few sketches of characters, describing a few fun types of gameplay, and generating their own Roblox experience from that.

I was on Claude earlier today and said, “Can you create me like a game where a journalist hunts for scoops and they have to ask the characters for a scoop three times, and then at the third time, they will give you a scoop, and you get a point for each scoop.” It actually builds the game. It’s called Scoop Hunter. And you can play it. How far away do you think we might be from being able to do that within Roblox?

I don't think we're too far away. And it's fun to imagine taking the prompts you gave Claude and trying those same prompts in a fully immersive, 3D environment. Where you're going with that is exactly right. I like the notion of your game Scoop Hunter. I like the idea that the full physical fabric of that system is a high-resolution 3D environment: a 3D environment that can run on any device, that can be multiplayer around the world, and that fabric then can support that query. We're going to need some NPCs powered by AI. We might need different personalities that think about their scoops. We might need a great town square that you would stylize more as you discussed it, and that'll all come to life. We're not that far away from that.

And that’s coming next year?

I won't say when, but I will say we're going to ship in Q1 both text generation, as well as 3D object generation in Roblox experiences. We would philosophically agree with you that full experience generation is the highest way to think about that. A full experience that's immersive is 3D objects, it’s prompts, it’s code, it’s pulling all of that together.

When we're in a dream, we're generating a 3D environment in real time that's changing very quickly as we move around that dream. In a way, what you're describing is, if it's done in real time, and you're walking around within Scoop Hunter, and you say, “Whoa, morph into this. I want a sci-fi Scoop Hunter with the supreme council of 10,000 scoopers.” You can imagine all of that happening in real time.

You're saying you want the players also to be able to prompt as they're playing, to change the game experience.

We’re thinking more of a game experience available to the player, not just the traditional game creator. Roblox Studio is a very powerful tool. All of the facilities in Roblox Studio, we want to be available to the game creator: AI creation, interactive modeling, 3D things. There's a blurring between the studio tool and the creator, and what you're talking about could be done by the user in real time.

Just to confirm, your goal is to enable users to prompt an experience out of thin air, like I did with Scoop Hunter. And then, as they’re playing, to shape the experience to their liking via generative AI?

I'm not sure everyone, 24/7, will be running around Roblox, dynamically creating environments all the time, because many of us will be hanging out with our friends, going to a concert. Many of us will go to the creations of other people. Many of us will still like to play hide and seek. But for those who want to use that type of experience or creation, I do think it'll become available.

When you play a game, the thing that makes it so enjoyable is the taste and the talent of the game creator.

That's correct.

We play Assassin's Creed in this house, and part of the joy of Assassin's Creed is just the brilliance of the Ubisoft creators who have gone out and built that game. You could have a normie try to prompt a game, but I’m not bullish they'll be able to replicate what makes playing a game like Assassin's Creed so magical.

When I was younger, I got a Mac Plus. And then the Mac came out. And the Mac had WYSIWYG windows and fonts. All of a sudden everyone thought they could do text layout and typography, and we just saw all these documents with 39 different fonts that look like crap.

There's a little bit of an analogy to that, where font craftsmanship and layout used to be done with movable type. It moved online to Photoshop and other tools, but still, there are craftspeople who are really good at layout. The same is true as we move from oil painting to digital tools and photography. The same will stay for that Assassin's Creed game you mentioned: there will be a lot of taste and a lot of art with AI acceleration.

But do you think that AI can replicate that human taste and brilliance that it takes to create a great game?

I think we're still in the early forms of AI, where arguably, AI is super good at word manipulation, and it's learning shape rotation and shape manipulation and more spatial type and engineering things. So I think we're early.

10,000 years from today, will AI be able to generate games and experiences that are indistinguishable from humans? I could see that. What's interesting, though, is thinking through what does that really mean for us, culturally and as society, between here and that next 10,000 years? There's an optimistic notion that we still like things built by humans. Sometimes we like to buy that designer thing rather than that mechanical thing. I don't know how we go from here, over the next 10,000 years. Ultimately, AI is going to get pretty smart.

Building foundational models is exceptionally expensive. Roblox’s market cap is north of $37 billion, but I want to know how you're able to do it with the resources that you have. How tough has this been?

We have a really large AI team. If you look at our history, we've made some acquisitions with LoomAI and some brilliant talent there. We've been constantly hiring, and we've been building models for four years now, primarily for text and voice safety, for asset moderation. We've gotten really good at building and running AI at Roblox behind the scenes. There's over 200 different models running on our system that are doing so many things at higher quality and higher performance. We have also gotten very good at running relatively complex models efficiently.

DeepSeek is in the news, and that rings a bell for us because our voice model runs very efficiently. We're able to use it to help moderate all the voice on our platform. We've open-sourced one of our voice models so other people can use this model for safety and civility.

Bridging that into 3D generation, we also have an amazing amount of data, and a very big data set that is usable for training 3D object generation. We’ve started to put that together. We have enough hardware. We have a great team, and we're going to run this efficiently so that any creator can use it in their game. We're uniquely poised to generate this 3D foundational model and expand on that.

It's all proprietary?

All proprietary.

And you're training on actual gameplay to be able to create more gameplay?

We have both static information, which would be 3D objects and shapes. We have something more than static information, which is the code embedded in those objects. Cars on Roblox, we call them 4D cars, not 3D, because they have code like: How and when do you open the door? How does the user interface work on that? What are the properties of the wheels and the motors on that car? We have that data to train on as well. How does a car actually function, as opposed to, how does it look good?

The future, though (once again, in a privacy and IP compliant way), is that we also will know more and more about how people interact with objects. What's a typical way for you or I to walk through a building? How do people interact in a sword fight when they're pretending they're knights? How do people climb on cars? That time-based information complements the static information and starts to beckon more interactive experiences. When I say proprietary, I mean proprietary plus, of course, the huge open source ecosystem that's out there for tools: open source, complementary things. But all of the 3D stuff is very heavily proprietary.

So what I'm hearing is you're training on the objects, some of the interactions, but not actual gameplay?

Not yet. Training on gameplay isn't necessarily something we would use to create gameplay, but when you or I pick a favorite historical figure, whether it's George Washington or the founder of any country, that data may allow us to make more natural avatar simulations as well. It may allow one of your scoop hunters to act more naturally, if we know how those various character archetypes work when they've got a scoop and they want to share it with you. We'll see that ultimately drive the avatar simulation as well.

Do you think this type of technology can then be applied to successfully prompt a blueprint, furniture design, or some sort of town layout, given that you understand the 3D nature of objects and how they interact? It’s so far been difficult for AI to do this without hallucinating.

This is really interesting, because there's probably hundreds of thousands of town layouts on Roblox. As we start to use the data of human motion through those town layouts, there's a lot of embedded information. What layouts work? What layouts promote better social cohesion? What town layouts aren't very good? The combination of those existing town layouts, plus how people interact with them does beckon a future of getting useful layouts from what's traditionally thought of as a game engine that could actually be used for real life purposes.

I'll give you one example I saw a few days ago. We asked our 3D foundational model to generate an over-the-road, 18-wheel semi-trailer, and we started constraining it a bit. We started saying, “make it fit in this size.” And these models are getting smart enough that in a smaller size they'll generate the cab of the trailer without the trailer, and in a larger box, they'll say, “Oh, now you have enough space to generate the trailer.”

I like the idea of a creator on Roblox who's thinking of it as a game using our AI to build a fun game called “build my own house,” have some AI help power it, and have it be a mix of human intuition and AI to make the house really functional.

Over in AutoCAD land, there are these beautiful 3D architectural programs being driven by AI, physics, and aesthetics. We're all going to be designing our own homes.

Here’s a question from our Discord. One me