

Disclaimer: The details in this post have been derived from the LinkedIn Engineering Blog. All credit for the technical details goes to the LinkedIn engineering team. The links to the original articles are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

One of the primary goals of LinkedIn is to provide a safe and professional environment for its members.

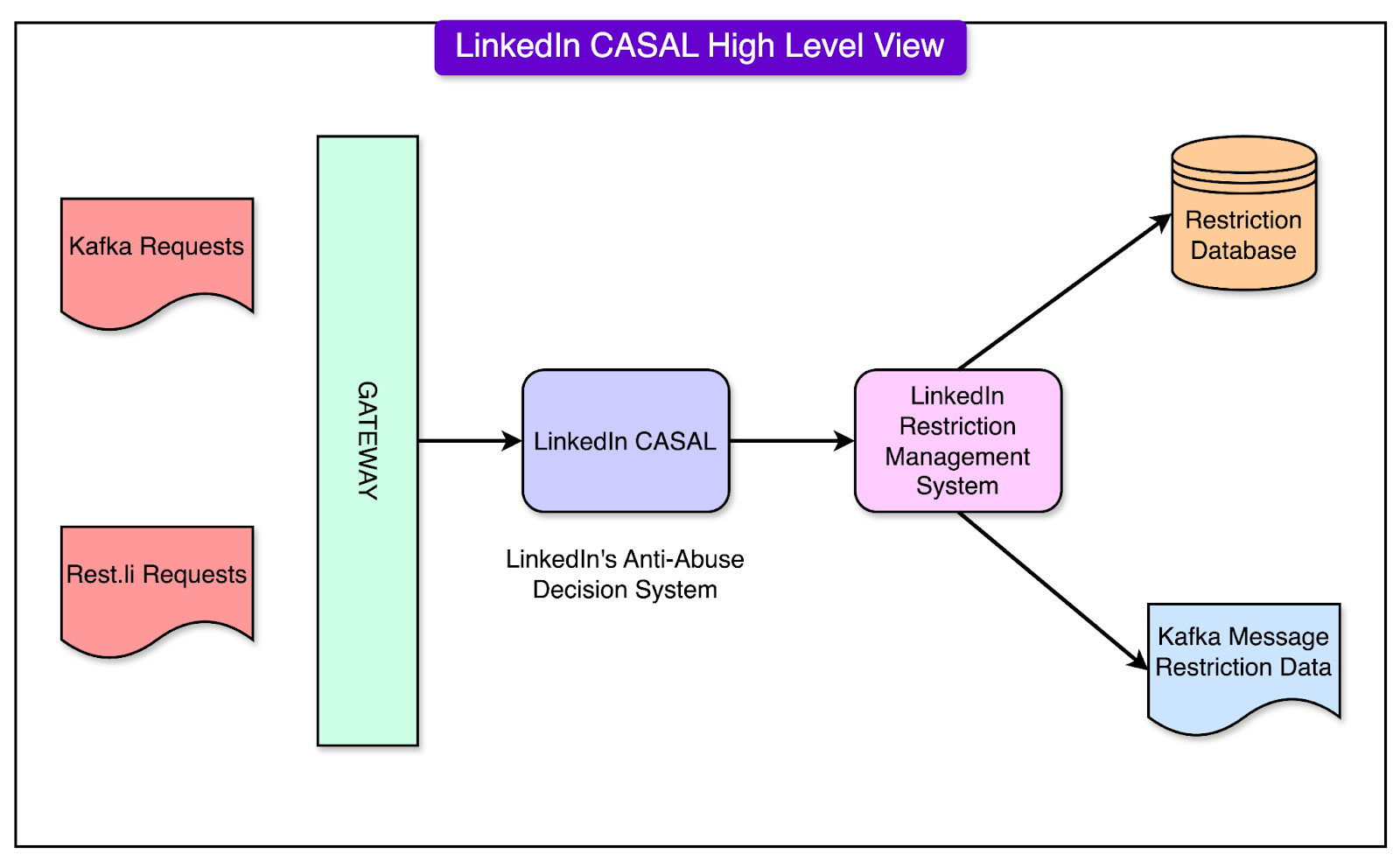

At the heart of this effort lies a system called CASAL.

CASAL stands for Community Abuse and Safety Application Layer. This platform is the first line of defense against bad actors and adversarial attacks. It combines technology and human expertise to identify and prevent harmful activities.

CASAL has four main components:

ML Models: The ML models analyze patterns in user behavior to detect anything unusual or suspicious. For example, if a user suddenly sends hundreds of connection requests to strangers or repeatedly posts harmful content, the system can flag these activities for review.

Rule-Based Systems: These systems work based on pre-defined rules. Think of them as guidelines that help the platform decide what’s acceptable and what’s not. For instance, certain words or actions that violate LinkedIn’s policies (like hate speech or spam) automatically trigger alerts.

Human Review Processes: Not everything can be left to machines. A dedicated team of human experts steps in to review flagged activities and make decisions on borderline cases.

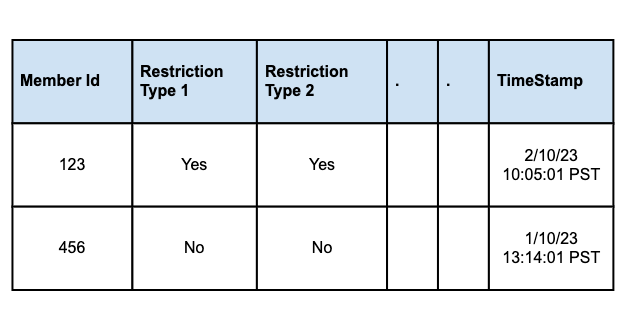

Multi-Faceted Restrictions: Not all harmful activities are the same. This is why LinkedIn uses multi-faceted restrictions. Some restrictions might involve temporarily limiting a user’s actions, like stopping them from sending connection requests for a while. Other situations may require more severe measures, such as permanently blocking an account if it poses a significant threat.

Together, these tools form a multi-layered shield, protecting LinkedIn’s community from abuse while maintaining a professional and trusted space for networking.

In this article, we’ll look at the design and evolution of LinkedIn’s enforcement infrastructure in detail.

Evolution of Enforcement Infrastructure

There have been three major generations of LinkedIn’s restriction enforcement system. Let’s look at each generation in detail.

First Generation

Initially, LinkedIn used a relational database (Oracle) to store and manage restriction data.

Restrictions were stored in Oracle tables, with different types of restrictions isolated into separate tables for better organization and manageability. CRUD (Create, Read, Update, Delete) workflows were designed to handle the lifecycle of restriction records, ensuring proper updates and removal when necessary.
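To make the CRUD lifecycle concrete, here is a minimal sketch of what one such restriction table and its workflows could look like. It is an illustration only: it uses Python's built-in sqlite3 module as a stand-in for Oracle, and the table name, column names, and helper functions are all hypothetical rather than taken from LinkedIn's system.

```python
import sqlite3

# In-memory SQLite stands in for Oracle here (assumption for illustration).
conn = sqlite3.connect(":memory:")

# Hypothetical table for ONE restriction type; other types would get their own tables.
conn.execute(
    "CREATE TABLE invite_restrictions ("
    " member_id INTEGER PRIMARY KEY,"
    " reason TEXT NOT NULL,"
    " expires_at INTEGER"  # NULL means the restriction does not expire
    ")"
)


def create_restriction(member_id, reason, expires_at=None):
    """Create: record a new restriction for a member."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO invite_restrictions (member_id, reason, expires_at)"
            " VALUES (?, ?, ?)",
            (member_id, reason, expires_at),
        )


def read_restriction(member_id):
    """Read: return (reason, expires_at), or None if the member is not restricted."""
    return conn.execute(
        "SELECT reason, expires_at FROM invite_restrictions WHERE member_id = ?",
        (member_id,),
    ).fetchone()


def update_restriction(member_id, reason, expires_at):
    """Update: change the reason or expiry of an existing restriction."""
    with conn:
        conn.execute(
            "UPDATE invite_restrictions SET reason = ?, expires_at = ?"
            " WHERE member_id = ?",
            (reason, expires_at, member_id),
        )


def delete_restriction(member_id):
    """Delete: lift the restriction."""
    with conn:
        conn.execute(
            "DELETE FROM invite_restrictions WHERE member_id = ?", (member_id,)
        )
```

In this sketch, every check of a member's status is a query against the database, which hints at why read volume became a problem as traffic grew.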

See the diagram below:


However, this approach posed a few challenges:

As LinkedIn grew and transitioned to a microservices architecture, the relational database approach couldn’t keep up with the increasing demand.

The architecture became cumbersome due to Oracle’s limitations in handling high query volumes and maintaining low latency.

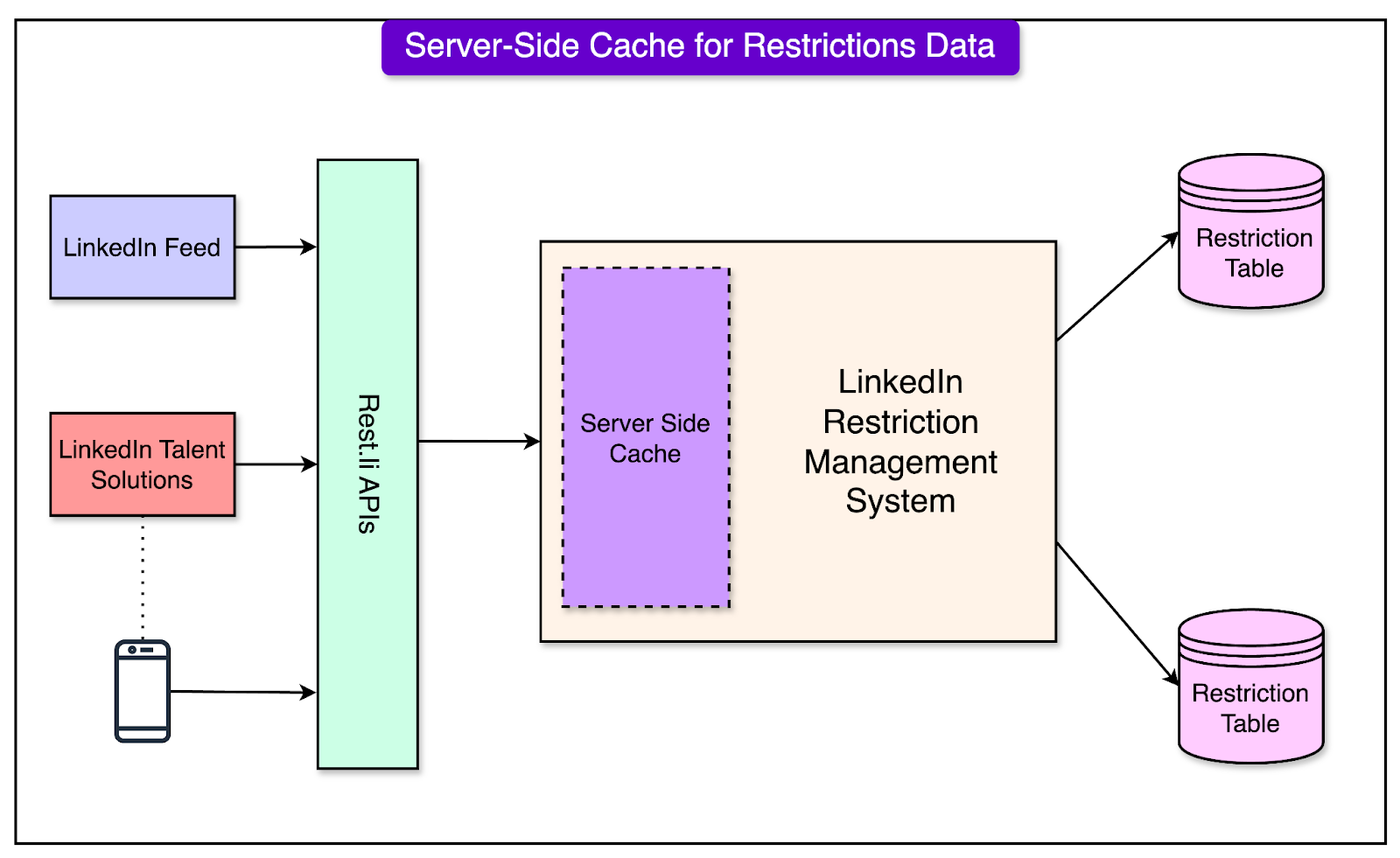

Server-Side Cache Implementation

To address the scaling challenges, the team introduced server-side caching. This significantly reduced latency by minimizing the need for frequent database queries.

A cache-aside strategy was employed that worked as follows:

When restriction data was requested, the system first checked the in-memory cache.

If the data was present in the cache (cache hit), it was served immediately.

If the data was not found (cache miss), it was fetched from the database, and the cache was updated asynchronously so that future requests could be served from memory.

See the diagram below that shows the server-side cache approach:

Restrictions were assigned predefined TTL (Time-to-Live) values, ensuring the cached data was refreshed periodically.
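The cache-aside flow with TTL expiry can be sketched in a few lines of Python. This is a simplified illustration, not LinkedIn's implementation: the class name, the `load_from_db` callback, and the injectable clock are assumptions made for the example, and the cache is filled synchronously here, whereas the system described above updated it asynchronously.

```python
import time


class CacheAsideStore:
    """In-memory cache-aside lookup with a fixed TTL (illustrative sketch)."""

    def __init__(self, load_from_db, ttl_seconds, clock=time.monotonic):
        self._load_from_db = load_from_db  # hypothetical database read function
        self._ttl = ttl_seconds
        self._clock = clock                # injectable so expiry can be tested
        self._cache = {}                   # key -> (value, expiry_time)

    def get(self, key):
        now = self._clock()
        entry = self._cache.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]                # cache hit: serve from memory

        # Cache miss, or the entry's TTL has elapsed: read from the database
        # and store the result for future requests.
        value = self._load_from_db(key)
        self._cache[key] = (value, now + self._ttl)
        return value
```

Because each instance holds its own dictionary, every host running this code warms and expires its cache independently of the others.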

There were also shortcomings with this approach:

The server-side cache wasn’t distributed, so each host had to maintain its own cache.

The approach worked well for low-traffic scenarios, but struggled under high cache-hit demands, necessitating further improvements.

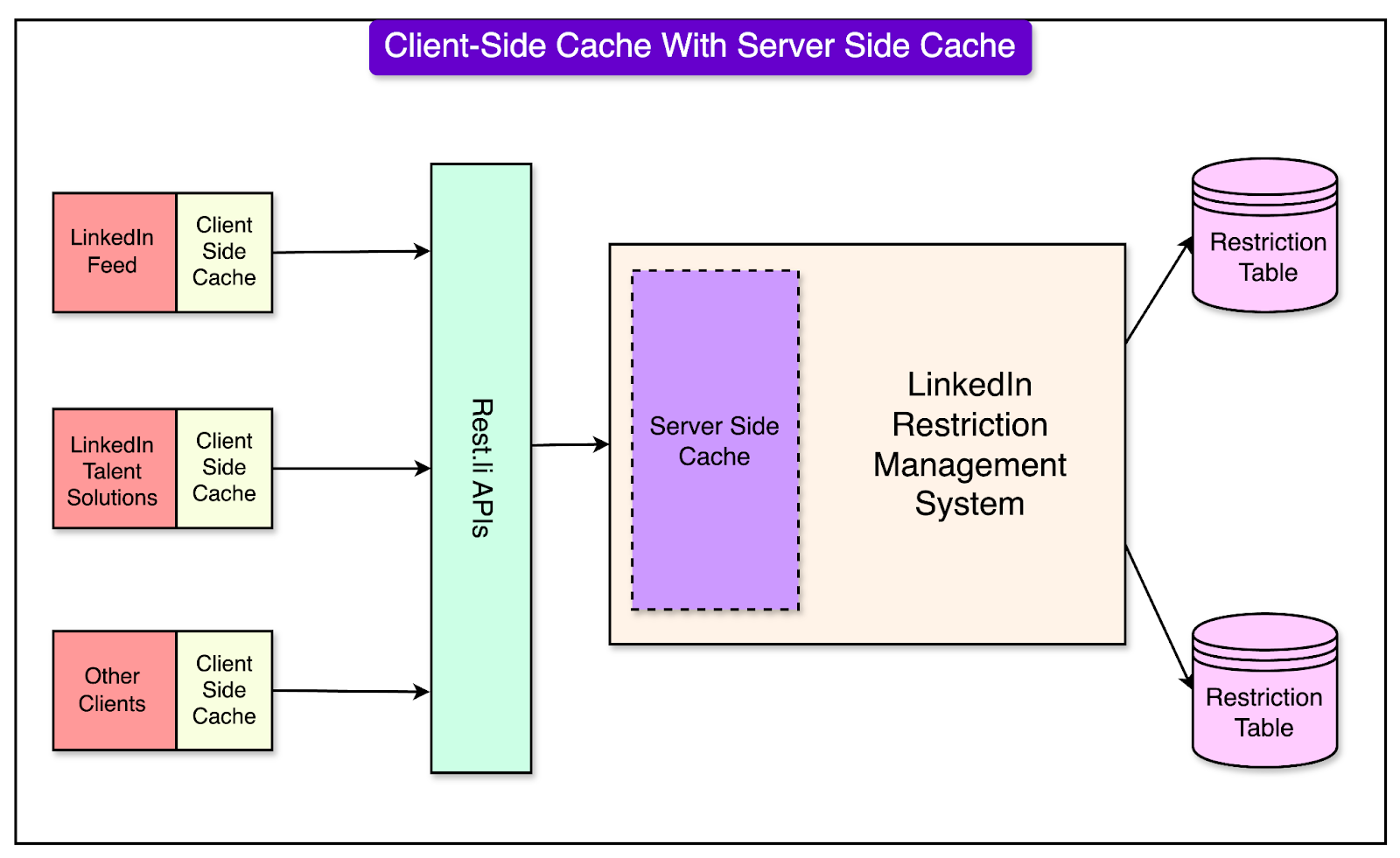

Client-Side Cache Addition

Building on the server-side cache, LinkedIn introduced client-side caching to improve performance further. This approach enabled upstream applications (like LinkedIn Feed and Talent Solutions) to maintain their own local caches.

See the diagram below:

To facilitate this, a client-side library was developed to cache the restriction data directly on application hosts, reducing the dependency on server-side caches.
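The effect of layering a client-side cache over the server-side one can be illustrated with a small sketch. Everything here is hypothetical: the `CachedTier` class, the tier names, and the stand-in database function are assumptions for the example, and TTL handling is omitted to keep the focus on how requests are absorbed at each layer.

```python
class CachedTier:
    """A cache that sits in front of a slower lookup (illustrative sketch)."""

    def __init__(self, name, next_lookup):
        self.name = name
        self._next_lookup = next_lookup  # called only on a local miss
        self._cache = {}
        self.requests = 0                # lookups received by this tier
        self.misses = 0                  # lookups forwarded to the next tier

    def lookup(self, member_id):
        self.requests += 1
        if member_id not in self._cache:
            self.misses += 1
            self._cache[member_id] = self._next_lookup(member_id)
        return self._cache[member_id]


db_reads = []


def database_lookup(member_id):
    """Stand-in for the restriction database; records every read it serves."""
    db_reads.append(member_id)
    return member_id % 2 == 0  # arbitrary placeholder for "is restricted"


# One server-side cache shared by two upstream applications,
# each with its own client-side cache on its application host.
server = CachedTier("restriction-service", database_lookup)
feed_client = CachedTier("feed-client", server.lookup)
talent_client = CachedTier("talent-client", server.lookup)
```

If `feed_client.lookup(7)` is called three times and `talent_client.lookup(7)` once, the server tier receives only two requests and the database only one read; the remaining lookups are answered on the application hosts.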